# delete the 23rd line from ACCOUNT_LOG.sql and save, and create a backup file named ACCOUNT_LOG.sql.bak # it takes about 20 seconds to update a file with 2Gb sed -i.bak -e '23d' ACCOUNT_LOG.sql |

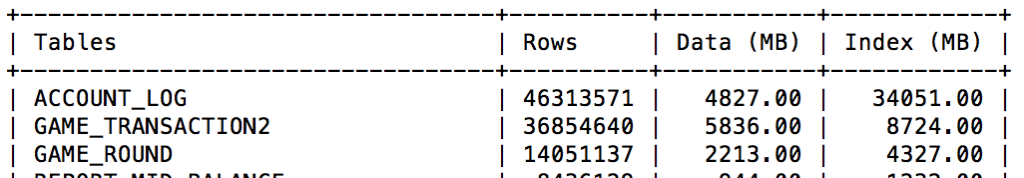

How to get rows, size of data and index from information_schema.TABLES

Install and configure Apple Time Machine service on Solaris

I installed Netatalk on Solaris to backup my Macs, it works perfectly!

Here is the steps,

1. install bdb, this is needed by netatalk

cd build_unix ../dist/configure make make install |

2. install nettalk by running configure, make, make install

3. prepare disk. create the filesystem in ZFS and mount

zfs create -o mountpoint=/sp/timemachine sp/timemachine zfs create -o mountpoint=/sp/timemachine/yang sp/timemachine/yang |

4. configure Netatalk: afp.conf

; Netatalk 3.x configuration file [Global] hostname = Solar mimic model = RackMac ; [Homes] ; [My AFP Volume] [yang] path = /sp/timemachine/yang valid users = yang time machine = yes |

5. enable service

svcadm enable multicast svcadm enable svc:/system/avahi-bridge-dsd:default svcadm enable netatalk |

Import ZFS storage pool

I had one ZFS storage pool (mirror) on my old home server, which hosts my all photos, code, and some movies. I recently bought a new HP Gen8 Microserver, installed Solaris 11 on it, now I need to import the ZFS pool to my new Gen8 server.

The first thing is simply, just remove the disks from the old server, and attach them to the Gen8, then in Solaris on Gen 8:

1. Check the current zfs storage by using zfs list

root@solar:/# zfs list NAME USED AVAIL REFER MOUNTPOINT rpool 9.99G 106G 4.64M /rpool rpool/ROOT 2.78G 106G 31K legacy rpool/ROOT/solaris 2.78G 106G 2.47G / rpool/ROOT/solaris/var 309M 106G 307M /var rpool/VARSHARE 2.53M 106G 2.44M /var/share rpool/VARSHARE/pkg 63K 106G 32K /var/share/pkg rpool/VARSHARE/pkg/repositories 31K 106G 31K /var/share/pkg/repositories rpool/VARSHARE/zones 31K 106G 31K /system/zones rpool/dump 5.14G 106G 4.98G - rpool/export 98K 106G 32K /export rpool/export/home 66K 106G 32K /export/home rpool/export/home/yang 34K 106G 34K /export/home/yang rpool/swap 2.06G 106G 2.00G - |

2. list all ZFS storage pool which can be imported by using zpool import without any pool name

root@solar:/# zpool import pool: sp id: 4536828612121004016 state: ONLINE status: The pool is formatted using an older on-disk version. action: The pool can be imported using its name or numeric identifier, though some features will not be available without an explicit 'zpool upgrade'. config: sp ONLINE mirror-0 ONLINE c3t0d0 ONLINE c3t1d0 ONLINE |

Here we can see a ZFS pool named ‘sp’ can be imported. It is a RAID 1 pool (mirror).

3. before importing, create folder to mount the pool which will be imported

root@solar:/# mkdir /sp |

4. import the pool by using zpool import #POOL_NAME

root@solar:/# zpool import sp |

5. check the imported pool and ZFS

root@solar:/sp/important/photo/All# zpool list NAME SIZE ALLOC FREE CAP DEDUP HEALTH ALTROOT rpool 118G 9.77G 108G 8% 1.00x ONLINE - sp 2.72T 284G 2.44T 10% 1.00x ONLINE - root@solar:/sp/important/photo/All# zfs list NAME USED AVAIL REFER MOUNTPOINT ... sp 284G 2.40T 152K /sp sp/important 284G 2.40T 168K /sp/important sp/important/code 63.3G 2.40T 63.3G /sp/important/code sp/important/movie 31.4G 2.40T 31.4G /sp/important/movie sp/important/photo 190G 2.40T 190G /sp/important/photo |

Enable SMB share on Solaris 11

# 1. enable smb share zfs set share=name=iMovie,path=/sp/important/movie,prot=smb sp/important/movie zfs set sharesmb=on sp/important/movie # 2. start server # svcadm enable network/smb/server # svcadm enable network/smb/client svcadm enable -r smb/server # 3. enable user smbadm enable-user user1 # 4. vi /etc/pam.d/other password required pam_smb_passwd.so.1 nowarn # 5. change password for user1 passwd user1 |

How to remove share name for smb on Solaris 11

Created several share name on same zfs system, here is the way to remove

root@solar:/etc/pam.d# zfs get share sp/important/movie NAME PROPERTY VALUE SOURCE sp/important/movie share name=none,path=/sp/important/movie,prot=smb local sp/important/movie share name=iMovie,path=/sp/important/movie,prot=smb local sp/important/movie share name=movie,path=/sp/important/movie,prot=smb local root@solar:/etc/pam.d# share -F smb -A none /sp/important/movie - iMovie /sp/important/movie - movie /sp/important/movie - # -------------- DELETE -------------------- root@solar:/etc/pam.d# unshare -F smb movie root@solar:/etc/pam.d# unshare -F smb none root@solar:/etc/pam.d# share -F smb -A iMovie /sp/important/movie - root@solar:/etc/pam.d# zfs get share sp/important/movie NAME PROPERTY VALUE SOURCE sp/important/movie share name=iMovie,path=/sp/important/movie,prot=smb local |

BigDecimal.divide: Non-terminating decimal expansion

java.lang.ArithmeticException: Non-terminating decimal expansion; no exact representable decimal result.

在使用BigDecimal进行除法运算时,在除不尽的情况下,Java会抛出上述异常。

解决办法是在调用divide方法的另一个实现,指定精度及舍入模式

BigDecimal.TEN.divide(new BigDecimal('3'), 2, RoundingMode.HALF_UP) |

Performance issue when updating/inserting massive data into InnoDB

innodb_flush_log_at_trx_commit - 在MySQL 5.5中,该值默认为1,此时在每次事务提交时,MySQL都会将log buffer中的内容写到日志文件,并将日志文件刷新到磁盘上去。所以如果磁盘不是SSD,在进行批量更新、插入的时候性能会非常差。

今天在Stage上生成所有的报表数据,其中一张源表大概有4千万行,结果运行速度非常慢,生成一天的报表数据要花上好几个小时。生成报表的存储过程需要操作(插入、更新)十来张表,但在存储过程中,我们却没有使用事务,也就是说,每一次insert/update后,MySQL都会将改动写入磁盘,而stage仅仅是一般的SATA硬盘,自然性能就会非常差。

解决的办法是改进存储过程,在其中加入事务控制,大概每1000条报表记录提交一次。测试后速度大大提高,生成一天的数据仅需要1到2分钟。

Send email via openssl command line

root@localhost$ openssl s_client -crlf -quiet -starttls smtp -connect mail.server.com:465 depth=0 OU = GT85507400, OU = See www.rapidssl.com/resources/cps (c)14, OU = Domain Control Validated - RapidSSL(R), CN = *.name.com verify ... 250 HELP > EHLO client.com 250-mail.server.com Hello client.com [192.168.1.2] 250-SIZE 52428800 250-8BITMIME 250-PIPELINING 250-AUTH PLAIN LOGIN 250 HELP > AUTH LOGIN 334 VXNlcm5hbWU6 > [base64 code of username] 334 UGFzc3dvcmQ6 > [base64 code of password] 235 Authentication succeeded > MAIL FROM:support@server.com 250 OK > RCPT TO:yang@client.com 250 Accepted > DATA 354 Enter message, ending with "." on a line by itself > Subject:mail sending with mail.server.com > This is a test mail from mail.server.com, sent by openssl. > . 250 OK id=1YGmlz-0049vF-0V > quit 221 mail.server.com closing connection |

以 > 开始的行是客户端需要输入的命令。

在Ubuntu中更改时区

上次按网上说的用 拷贝文件的方式改就吃过亏了,cron应该是读取/etc/timezone来进行调度的。在Ubunut上正确修改时区的命令应该是

dpkg-reconfigure tzdata |

更改后

fp@fp2:~$ cat /etc/localtime TZif2UTCTZif2UTC UTC0 fp@fp2:~$ cat /etc/timezone Etc/UTC fp@fp2:~$ date Sun Sep 7 11:13:52 UTC 2014 |